Web scraping has grown steadily in relevance over the years, proving useful to any business that relies on data to make sound decisions.

It is a method for gathering data in large amounts, and it remains consistently useful; however, it is also easy to stop, since many tools and technologies are built to keep web scrapers from accessing content on servers.

These challenges can be intimidating and can even dissuade those looking to extract data from websites for the first time.

But there are several ways around these problems. In this article, we describe 9 of the best.

What Web Scraping Is

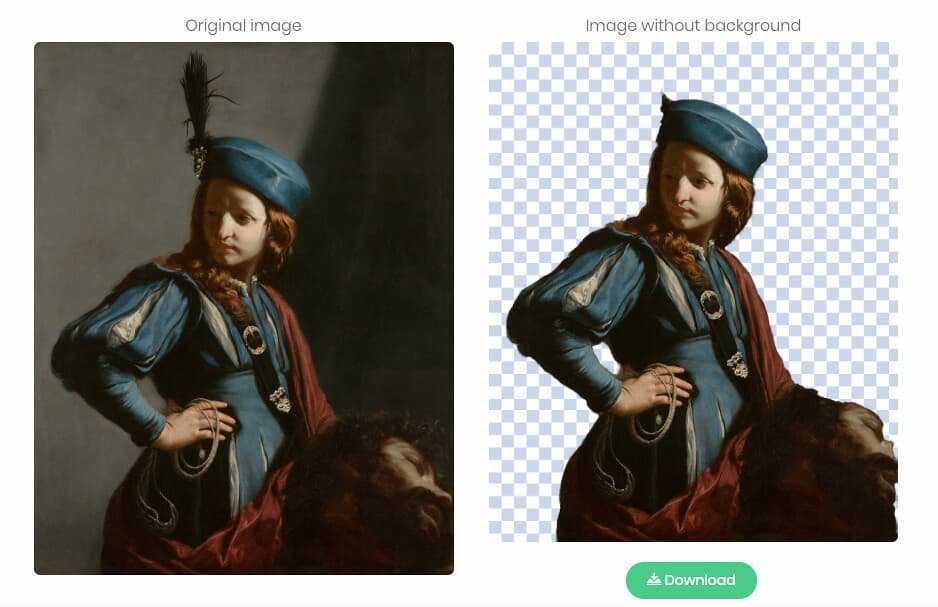

Web scraping is the automated collection of large amounts of data from different sources on the internet.

Tools such as proxies and web scrapers help make this form of data extraction safe, anonymous, and efficient.

The data can be collected while the user stays hidden, but most importantly, it can be collected quickly and in real time. This makes the data more relevant and useful as it represents market conditions at that exact moment.

Why Is Web Scraping Growing In Relevance?

Web scraping has been popular for several years now, mostly because of how easily it makes data available to businesses just when they need it. Brands can use web scraping in different ways, and below are some of the most common:

1. Investment Decision Making

Businesses and individuals are always making investments, and these investments are often made to generate gains and profits.

Informed decisions tend to lead to profitable investments, while uninformed ones can lead to losses that are hard to recover.

Web scraping is often used to collect the data that informs better investment decisions.

2. Sentiment and Market Analysis

Companies need to monitor and analyze consumer behavior and sentiments and the general outlook of the market.

Understanding buyers' sentiments helps a brand produce better products and services and provide better customer satisfaction.

And knowing what the market says can influence what a brand makes and how it approaches any given market.

3. Business Intelligence

In a world with so much competition in every field and industry, the businesses with insight and intelligence are the ones that lead the market.

Business intelligence can range from setting dynamic pricing to understanding trends and optimizing products, and web scraping provides a brand with the data needed to do all of these.

4. MAP Monitoring and Compliance

Minimum Advertised Price, or MAP, is the lowest price at which manufacturers and retailers agree a particular product may be advertised across different markets.

The agreement helps prevent retailers from manipulating prices to suit their needs or to put their competitors at a disadvantage.

It is important to monitor MAP to ensure that retailers are not undercutting it to win more market share.

9 Tips for Easing the Automated Data Gathering Process

Web scraping may be challenging, but with the right combination of tools and tips, you can overcome most of the obstacles between you and the data you need.

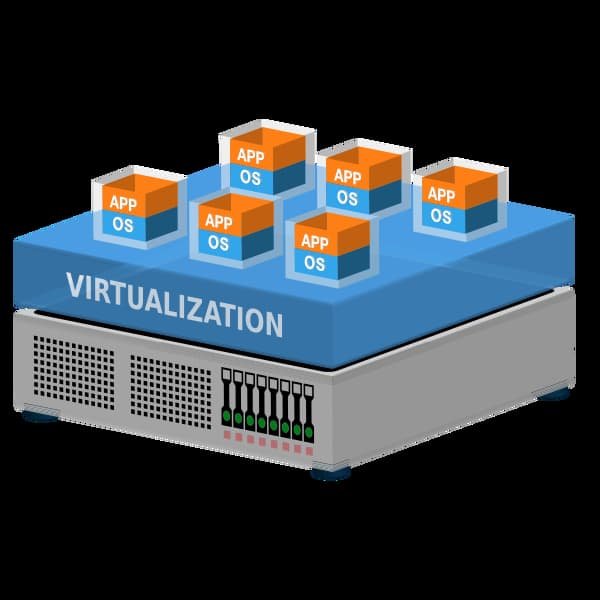

1. Use Proxies

Proxies are intermediary servers that stand between you and the internet and relay your connections.

They are best known for keeping the user safe and anonymous during web activities, but they can also help bypass several web scraping issues.

For instance, issues such as IP blocking and geo-restrictions can be handled using proxies, since they supply you with multiple IPs to choose from and switch between.
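As a rough illustration, the sketch below routes requests through a single proxy using only Python's standard library. The proxy address `proxy.example.com:8080` and the helper name `build_proxy_opener` are placeholders invented for this example, so substitute the details your own provider gives you.

```python
import urllib.request

# Hypothetical proxy endpoint; replace it with one from your provider.
PROXY_URL = "http://proxy.example.com:8080"


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxy_opener(PROXY_URL)
# With a working proxy, opener.open("https://example.com", timeout=10)
# would fetch the page through it instead of connecting directly.
```

Building the opener does not contact the network; the proxy is only used once a request is actually opened.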

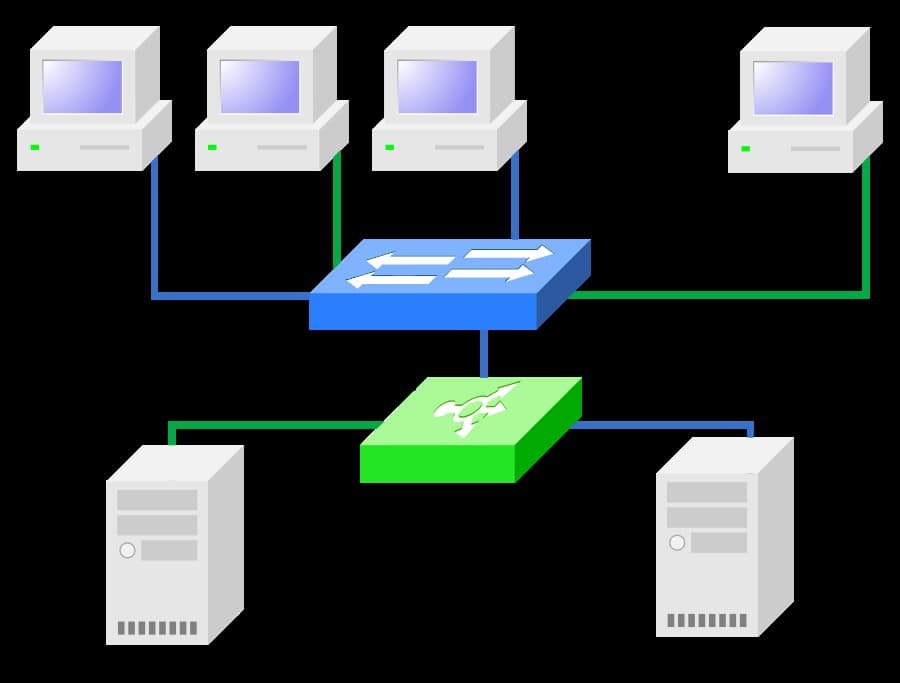

2. Always Rotate IPs

Using rotating IP addresses is another way to avoid bans during web scraping. These IPs rarely repeat, so each new request goes out from a different address. The server then treats each request as coming from a new user, which makes it harder to ban you during scraping.
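Many commercial rotating proxies handle the switching for you, but the idea can be sketched by hand as a simple round-robin over a pool. The addresses in `PROXY_POOL` and the function name `proxy_rotation` are made up for illustration.

```python
import itertools

# Hypothetical pool of proxy addresses; a real pool comes from your provider.
PROXY_POOL = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def proxy_rotation(pool):
    """Return an endless iterator that yields the pool's proxies in turn."""
    return itertools.cycle(pool)


rotation = proxy_rotation(PROXY_POOL)
# Each request takes the next proxy; after the last one it wraps around.
first_four = [next(rotation) for _ in range(4)]
```

A larger pool means each address is reused less often, which is the property that makes rotation effective.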

3. Never Repeat Patterns

Patterns are easy to fall into, as we all like to find the most comfortable way of getting things done, but they are also easy to spot and easy to tie to a single user.

Patterns to avoid include crawling the same pages in the same order and sending requests at fixed intervals. Instead, vary the order in which you crawl and set random intervals between requests, so the server cannot tell that the repeated requests all come from you.
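One way to picture this is a small planner that shuffles the crawl order and assigns each request a random pause. This is only a sketch: the function names and the 2 to 6 second delay range are assumptions, not recommended values.

```python
import random
import time


def randomized_plan(urls, min_delay=2.0, max_delay=6.0, rng=None):
    """Return (url, delay) pairs in shuffled order with random delays in seconds."""
    if rng is None:
        rng = random.Random()
    shuffled = list(urls)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    return [(url, rng.uniform(min_delay, max_delay)) for url in shuffled]


def crawl(urls, fetch, sleep=time.sleep):
    """Call fetch(url) for every URL, pausing a random interval after each one."""
    for url, delay in randomized_plan(urls):
        fetch(url)
        sleep(delay)
```

Here `fetch` stands for whatever function downloads a page in your scraper; passing it in keeps the timing logic separate from the download logic.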

4. Always Consult the Robots.txt Files

Most websites publish a robots.txt file that spells out which parts of the site automated clients may crawl and which they should stay away from.

These rules express the site owner's wishes, and ignoring them is a clear violation that can prompt a permanent block.
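Python's standard library includes a robots.txt parser, so checking a URL before requesting it takes only a few lines. The sketch below parses an inline sample file to stay self-contained; the rules, the `my-scraper` user agent, and the `example.com` URLs are all invented for the example.

```python
from urllib.robotparser import RobotFileParser

# Sample rules for illustration; a real file lives at https://<site>/robots.txt.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_text):
    """Parse the text of a robots.txt file and return the parser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


rules = load_rules(SAMPLE_ROBOTS)
allowed = rules.can_fetch("my-scraper", "https://example.com/products")
blocked = rules.can_fetch("my-scraper", "https://example.com/private/data")
delay = rules.crawl_delay("my-scraper")  # seconds requested between hits, or None
```

Against a live site, you would call `set_url()` with the robots.txt address and then `read()` instead of passing the text in by hand.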

5. Avoid Honeypot Traps

Honeypot traps are links that are hidden from human visitors, for example with CSS, but still present in the page's HTML where a scraping bot can find them.

Following a honeypot link gives you away as a bot and can prompt an immediate ban.
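A partial defense is to skip links that are obviously hidden before following them. The sketch below only checks the `hidden` attribute and inline `style` values, which is an assumption about how a trap might be built; links hidden through external stylesheets or scripts would need a rendered page to detect. The class and function names are invented for this example.

```python
from html.parser import HTMLParser


class VisibleLinkParser(HTMLParser):
    """Collect hrefs from <a> tags that are not obviously hidden inline."""

    HIDDEN_MARKERS = ("display:none", "visibility:hidden")

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        # Normalize the inline style so "display: none" matches "display:none".
        style = (attr.get("style") or "").replace(" ", "").lower()
        hidden = "hidden" in attr or any(m in style for m in self.HIDDEN_MARKERS)
        if attr.get("href") and not hidden:
            self.links.append(attr["href"])


def visible_links(html):
    """Return the hrefs of links that pass the inline visibility checks."""
    parser = VisibleLinkParser()
    parser.feed(html)
    return parser.links


SAMPLE_HTML = (
    '<a href="/products">Products</a>'
    '<a href="/trap-1" style="display: none">secret</a>'
    '<a href="/trap-2" hidden>secret</a>'
)
safe_links = visible_links(SAMPLE_HTML)
```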

6. Reduce the Speed

Sometimes, the problem is how fast you scrape a website. Servers have limited bandwidth and processing capacity, and hitting them with a flood of rapid requests can slow them down or even crash them. To avoid this, many servers watch for clients that send requests too quickly and block them once they are identified.
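A simple way to cap your request rate is a throttle that enforces a minimum gap between consecutive requests. The `Throttle` class below is a hypothetical helper written for this article, and the right interval depends on the site you are scraping.

```python
import time


class Throttle:
    """Make sure at least min_interval seconds pass between calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock  # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None  # time of the previous call, None before the first one

    def wait(self):
        """Sleep just long enough to respect the minimum interval."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

In a scraper, you would create one instance, for example `Throttle(2.0)`, and call `wait()` immediately before each request.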

7. Schedule Scraping for Off-peak Hours

Scraping is best done during off-peak hours, when the load on the servers is lower. During peak hours, many legitimate users are sending requests, so a scraper adds to an already heavy load and the system works harder to block bots and make room for human users.

This is why it is advisable to wait until traffic from real users has dropped and the servers are less busy before web scraping.
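A scheduler can enforce this with a small time-window check before each run. The 01:00 to 05:00 window below is purely an assumption; real off-peak hours depend on the site and should be judged in the time zone of its main audience.

```python
from datetime import datetime, time as dtime

# Assumed off-peak window; adjust it to the target site's actual quiet hours.
OFF_PEAK_START = dtime(1, 0)
OFF_PEAK_END = dtime(5, 0)


def is_off_peak(moment, start=OFF_PEAK_START, end=OFF_PEAK_END):
    """Return True if moment falls inside the window [start, end)."""
    current = moment.time()
    if start <= end:
        return start <= current < end
    # The window wraps past midnight, e.g. 22:00 to 04:00.
    return current >= start or current < end


run_now = is_off_peak(datetime.now())
```

A scraping job could check `is_off_peak()` at startup and exit early, leaving a scheduler such as cron to try again later.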

8. Use Headless Browsers

A headless browser has no graphical user interface and can be driven entirely from code, which makes it well suited to web scraping. It also loads and runs JavaScript the way a regular browser does, so it can reach dynamically rendered content and behaves more like a human user, making it harder for websites to detect and block.

9. Use Scraper APIs

Our final tip is to use a scraper API. These are interfaces built to connect a user directly to a program or system.

Because the connection is one the provider supports and allows, requests made through it are unlikely to be blocked.
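The exact request format differs from one provider to another, so the sketch below is purely illustrative: the endpoint `api.scraper.example` and the `url`, `api_key`, and `render_js` parameters are invented, and your provider's documentation defines the real ones. It only shows the general shape of passing a target page to an API as a query parameter.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; real scraper APIs publish their own URLs and parameters.
API_ENDPOINT = "https://api.scraper.example/v1/extract"


def build_api_request(target_url, api_key, render_js=False):
    """Return the full request URL for the hypothetical scraper API."""
    params = {
        "url": target_url,  # page you want the API to fetch on your behalf
        "api_key": api_key,  # credential issued by the provider
        "render_js": str(render_js).lower(),
    }
    # urlencode escapes the target URL so it survives as a query parameter.
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_api_request("https://example.com/products?page=2", "YOUR_KEY")
```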

Conclusion

Web scraping is useful but can also be challenging. However, you do not need to fear these challenges if you are new to extracting data from websites.

Following the tips provided above should help you easily overcome the most common challenges and get you to the data you need.
