
Key Takeaways

- The crucial role of persistent volumes for stateful applications in Kubernetes.

- Guide to setting up and managing Kubernetes volumes effectively.

- Best practices in selecting and using Kubernetes-supported storage solutions.

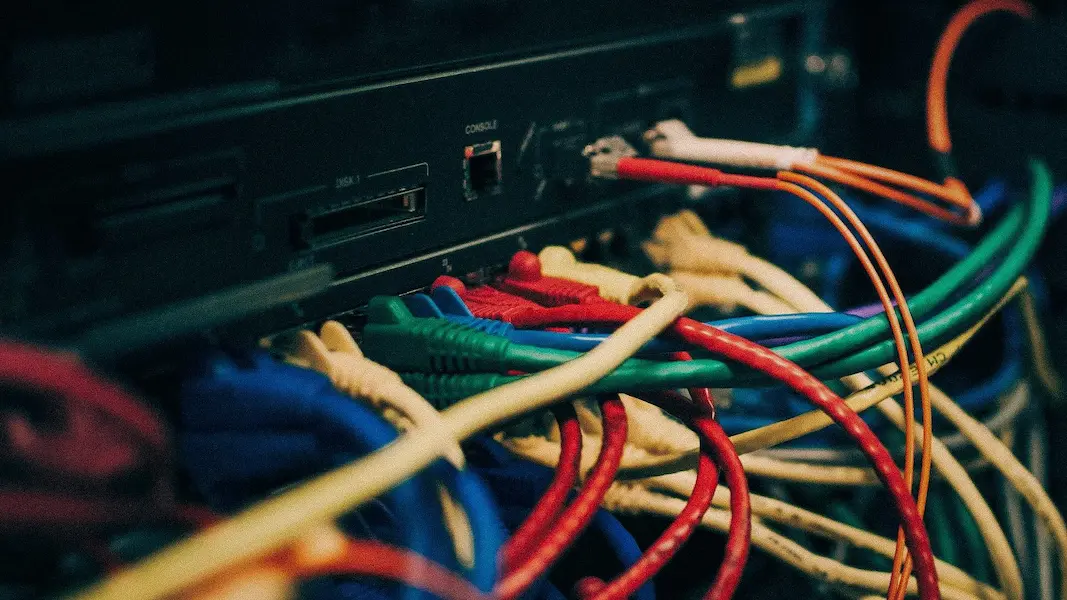

Understanding the Role of Persistent Volumes in Kubernetes

Whether you’re starting with Kubernetes or scaling up enterprise applications, understanding persistent storage is critical. Persistent volumes keep data available beyond the lifetime of any individual pod. Without them, data written inside a container is lost when the container restarts or the pod is deleted. That is a dire scenario for applications that rely on long-term data retention, such as databases and content management systems.

Persistent Volume Claims (PVCs) separate a request for storage from the details of how that storage is provided. Developers describe their storage needs, such as size and access mode, in a PVC, and Kubernetes binds the claim to a matching persistent volume. This shields developers from the complexity of the underlying storage infrastructure, and it is one of the reasons Kubernetes works well for both developers and operators.
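To make the claim side concrete, here is a minimal sketch that builds a PVC manifest as a Python dictionary and prints it as JSON. `kubectl apply -f` accepts JSON manifests as well as YAML. The claim name `app-data`, the `10Gi` size, and the storage class name `standard` are hypothetical placeholders, not values from any particular cluster.

```python
import json


def make_pvc(name: str, size: str, storage_class: str,
             access_mode: str = "ReadWriteOnce") -> dict:
    """Build a PersistentVolumeClaim manifest as a plain dict.

    The claim states only what storage is needed (size, access mode, class);
    Kubernetes decides which PersistentVolume satisfies it.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical claim; the output could be piped to `kubectl apply -f -`.
    print(json.dumps(make_pvc("app-data", "10Gi", "standard"), indent=2))
```

A pod then refers to the claim by name under `spec.volumes[].persistentVolumeClaim.claimName`, never to the underlying PV. This is what keeps storage details out of the pod definition.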

Persistent volumes are invaluable for businesses running critical applications that handle sensitive or business-critical data. They provide a reliable way to keep data intact when pods are rescheduled to different nodes in a Kubernetes cluster. This capability makes it practical to run stateful workloads in cloud-native environments, and it is why teams need people who understand the nuances of working with Kubernetes volumes.

Setting Up Persistent Volumes: A Step-by-Step Guide

Moving from recognizing the need for persistent storage to implementing it in Kubernetes can be complex. The first step is defining the persistent volume: its size, its access mode, and the storage backend it will use. You can provision volumes manually, pointing each PV at a specific, pre-existing storage asset. Alternatively, you can provision them dynamically, letting a StorageClass create volumes on demand whenever a claim requests one. The right choice depends largely on your workload’s requirements and the governance policies in place.
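As a sketch of manual provisioning, the function below renders a PersistentVolume that records the three things a volume definition needs: size (`capacity`), access mode (`accessModes`), and backend (here an `nfs` source). It assumes an existing NFS export. The server `nfs.example.internal`, the path `/exports/app`, and the class name `manual` are hypothetical. With dynamic provisioning you would skip this object entirely and let the StorageClass’s provisioner create it.

```python
import json


def make_nfs_pv(name: str, capacity: str, server: str, path: str) -> dict:
    """Manually provisioned PersistentVolume backed by an existing NFS export."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},          # size
            "accessModes": ["ReadWriteMany"],           # NFS can be mounted by many nodes
            "persistentVolumeReclaimPolicy": "Retain",  # keep data after the claim is released
            "storageClassName": "manual",               # hypothetical; claims must request the same class
            "nfs": {"server": server, "path": path},    # storage backend
        },
    }


if __name__ == "__main__":
    pv = make_nfs_pv("app-nfs-pv", "50Gi", "nfs.example.internal", "/exports/app")
    print(json.dumps(pv, indent=2))
```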

Once a volume exists, a PVC binds to it, and pods mount the storage by referencing that claim. The key to success here is making sure the access modes and storage classes match the application’s needs. Suppose your application needs simultaneous read-write access from pods on multiple nodes. In that case, the claim must request ReadWriteMany, and the storage backend must support it; many block-storage backends offer only ReadWriteOnce. The goal is a clean match between workload demands and storage capabilities.

Getting the persistent volume up and running is only half the job. It must also be managed responsibly, and security cannot be an afterthought. That means restricting which workloads can use a claim (PVCs are namespaced, so namespaces and RBAC are the usual levers), maintaining data integrity, and encrypting sensitive data to protect it from unauthorized access. These are non-negotiable aspects of managing Kubernetes volumes and are vital to a secure, resilient cloud-native environment.
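The matching described above can be illustrated with a simplified model of how a claim is paired with a volume. The storage class must match, the volume must offer every access mode the claim asks for, and its capacity must be at least the requested size. Among the candidates, this sketch picks the smallest one, which roughly mirrors the PV controller’s behavior for pre-provisioned volumes. It is not the controller’s actual code, and it ignores label selectors, volume mode, and node affinity. The `Volume` and `Claim` types are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

# Binary (Ki..Ti) and decimal (k..T) suffixes used in Kubernetes quantities.
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def parse_quantity(q: str) -> int:
    """Convert an integer storage quantity such as '10Gi' or '500M' to bytes."""
    for suffix, factor in _SUFFIXES.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)  # plain byte count


@dataclass
class Volume:
    name: str
    capacity: str
    access_modes: Set[str]
    storage_class: str


@dataclass
class Claim:
    request: str
    access_modes: Set[str]
    storage_class: str


def can_bind(pv: Volume, pvc: Claim) -> bool:
    """Simplified binding check: same class, superset of modes, enough space."""
    return (pv.storage_class == pvc.storage_class
            and pvc.access_modes <= pv.access_modes
            and parse_quantity(pv.capacity) >= parse_quantity(pvc.request))


def best_match(pvs: List[Volume], pvc: Claim) -> Optional[Volume]:
    """Pick the smallest volume that satisfies the claim, or None."""
    candidates = [pv for pv in pvs if can_bind(pv, pvc)]
    return min(candidates, key=lambda pv: parse_quantity(pv.capacity), default=None)
```

For example, consider three volumes: a 5Gi ReadWriteOnce volume, a 100Gi ReadWriteOnce volume, and a 20Gi volume offering both ReadWriteOnce and ReadWriteMany. A 10Gi ReadWriteMany claim can bind only to the 20Gi volume.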

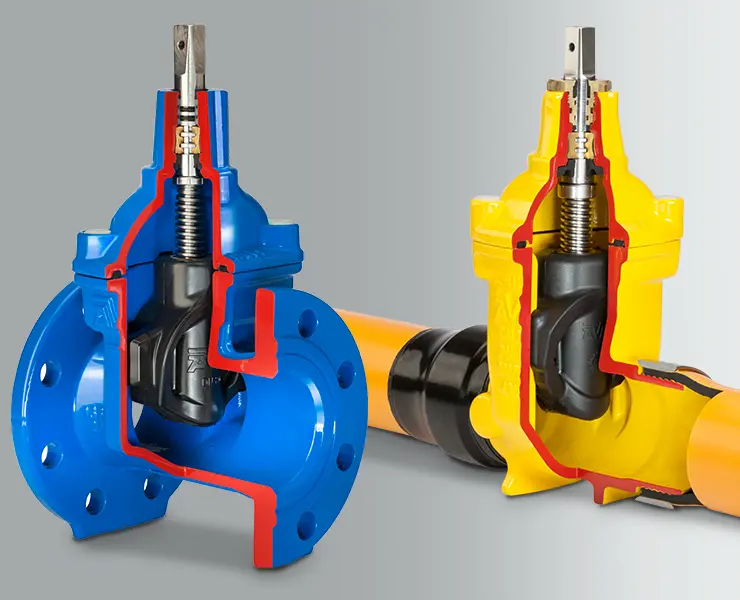

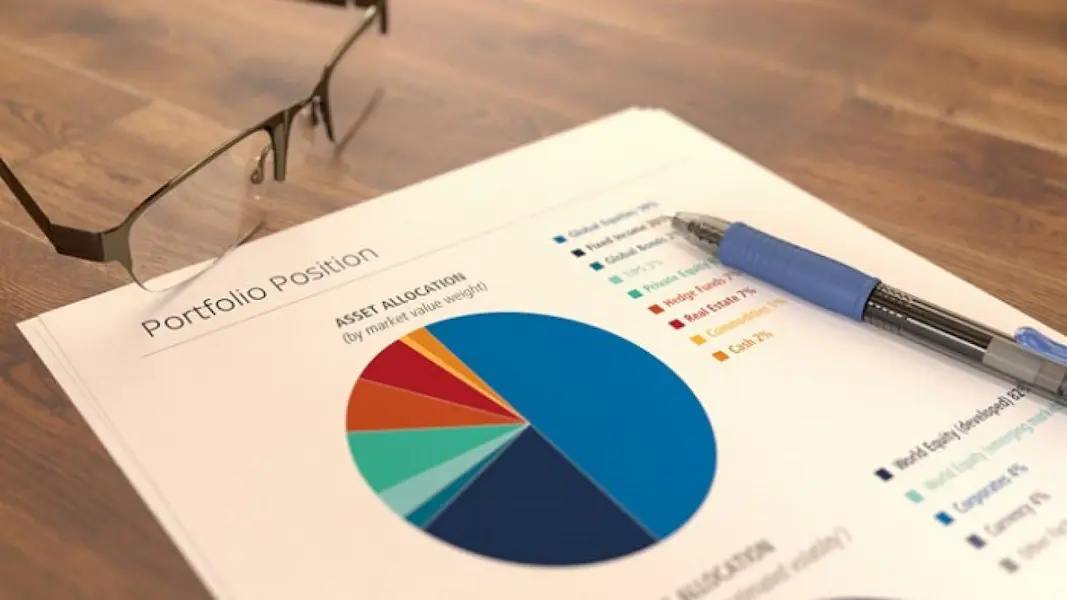

Comparing Kubernetes-Supported Storage Solutions

One size does not fit all when choosing a storage solution in the Kubernetes ecosystem. The decision depends on the application’s demands, budget constraints, and performance requirements. Local storage may be viable for small, standalone clusters, but larger, distributed environments usually need networked storage that survives the loss of a node. Cloud providers offer many options, such as managed file systems and block storage. Each comes with its own trade-offs in resilience, scalability, performance, and ease of administration.

Dynamic provisioning is a significant advantage here. By defining StorageClasses, administrators create templates that let Kubernetes provision volumes automatically to match requirements such as performance, data redundancy, and resilience. Understanding and using StorageClasses effectively can greatly streamline storage deployment and management in your clusters.
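A StorageClass template might look like the following sketch. It assumes the AWS EBS CSI driver (provisioner `ebs.csi.aws.com`, volume type `gp3`). Other platforms use different provisioner names and parameters, and the class name `fast-ssd` is hypothetical. The remaining fields are standard StorageClass settings.

```python
import json


def make_storage_class(name: str, provisioner: str, parameters: dict) -> dict:
    """StorageClass template that lets Kubernetes provision volumes on demand."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,   # CSI driver that creates the volumes
        "parameters": parameters,     # driver-specific settings
        # Delete the backing disk when the claim is deleted; "Retain" keeps it.
        "reclaimPolicy": "Delete",
        # Delay provisioning until a pod using the claim is scheduled,
        # so zonal disks are created where the pod will run.
        "volumeBindingMode": "WaitForFirstConsumer",
        # Allow resizing later by raising the claim's requested size.
        "allowVolumeExpansion": True,
    }


if __name__ == "__main__":
    # Assumes the AWS EBS CSI driver is installed; "gp3" is an EBS volume type.
    sc = make_storage_class("fast-ssd", "ebs.csi.aws.com", {"type": "gp3"})
    print(json.dumps(sc, indent=2))
```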

Cost is another vital factor. A storage solution with high throughput and low latency may come at a prohibitive price, so evaluating options means balancing technical requirements against budget limits. When comparing Kubernetes-supported storage solutions, consider the total cost of ownership, including data transfer (ingress and egress) costs. That way, the decision aligns with both technical and financial objectives.
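A rough monthly TCO comparison is simple arithmetic. The sketch below uses entirely hypothetical per-GB prices and workload volumes (not quotes from any provider) to show how transfer charges can outweigh a cheaper capacity price.

```python
from dataclasses import dataclass


@dataclass
class StorageOption:
    name: str
    capacity_per_gb: float   # provisioned capacity, per GB-month
    snapshot_per_gb: float   # snapshot/backup storage, per GB-month
    ingress_per_gb: float    # data transferred in, per GB
    egress_per_gb: float     # data transferred out, per GB


def monthly_tco(opt: StorageOption, capacity_gb: float, snapshot_gb: float,
                ingress_gb: float, egress_gb: float) -> float:
    """Monthly cost in currency units, rounded to cents."""
    cost = (capacity_gb * opt.capacity_per_gb
            + snapshot_gb * opt.snapshot_per_gb
            + ingress_gb * opt.ingress_per_gb
            + egress_gb * opt.egress_per_gb)
    return round(cost, 2)


if __name__ == "__main__":
    # Hypothetical prices and workload, for illustration only.
    a = StorageOption("option-a", 0.12, 0.05, 0.00, 0.09)
    b = StorageOption("option-b", 0.08, 0.03, 0.01, 0.20)
    for opt in (a, b):
        print(opt.name, monthly_tco(opt, capacity_gb=500, snapshot_gb=200,
                                    ingress_gb=50, egress_gb=300))
```

With these made-up numbers, option A comes to 97 per month and option B to 106.5. Option B costs more even though its capacity price is a third lower, because its egress pricing dominates for this workload.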

Overcoming Data Persistence Challenges in Kubernetes

Running stateful applications on Kubernetes introduces its own challenges, because the system’s dynamic, distributed nature complicates data persistence. For instance, node failures or pod rescheduling can disrupt access to persistent storage if they are not handled correctly. Kubernetes administrators therefore need strategies that account for these situations, such as replicating data across multiple nodes or geographic regions for redundancy and resilience. Technical safeguards alone aren’t enough, though; planning and foresight matter just as much. Regularly backing up data, testing that restores actually work, and auditing the system for potential vulnerabilities are all crucial defenses against data loss. The goal is a culture of preparedness that can absorb the inevitable hiccups of any complex system.
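Backups and restore tests can be expressed declaratively with the CSI snapshot API. The sketch below builds a `VolumeSnapshot` of an existing claim, plus a new claim that restores from that snapshot via `dataSource`. This is one way to validate a recovery path without touching production data. It assumes the cluster’s CSI driver supports snapshots and that the snapshot CRDs and controller are installed. The claim names, the `csi-snapclass` snapshot class, and the `fast-ssd` storage class are hypothetical.

```python
import json
from datetime import datetime, timezone


def make_snapshot(pvc_name: str, snapshot_class: str, now: datetime) -> dict:
    """VolumeSnapshot of an existing PVC, named with a UTC timestamp."""
    stamp = now.strftime("%Y%m%d-%H%M%S")
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": f"{pvc_name}-{stamp}"},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


def make_restore_claim(snapshot_name: str, new_pvc_name: str,
                       size: str, storage_class: str) -> dict:
    """New PVC pre-populated from a snapshot, for restore drills."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": new_pvc_name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            "dataSource": {
                "name": snapshot_name,
                "kind": "VolumeSnapshot",
                "apiGroup": "snapshot.storage.k8s.io",
            },
        },
    }


if __name__ == "__main__":
    snap = make_snapshot("db-data", "csi-snapclass", datetime.now(timezone.utc))
    restore = make_restore_claim(snap["metadata"]["name"], "db-data-restore-test",
                                 "20Gi", "fast-ssd")
    print(json.dumps([snap, restore], indent=2))
```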

While the framework Kubernetes provides is sturdy, the experience of its community makes it stronger. Peers who have faced similar challenges share success stories and cautionary tales that show how they approached common issues. You can fold those solutions into your own deployments and improve your data persistence strategy. Learning from this collective experience smooths the learning curve and spreads best practices across the Kubernetes user base.

Seamless Integration of Stateful Applications with Kubernetes Volumes

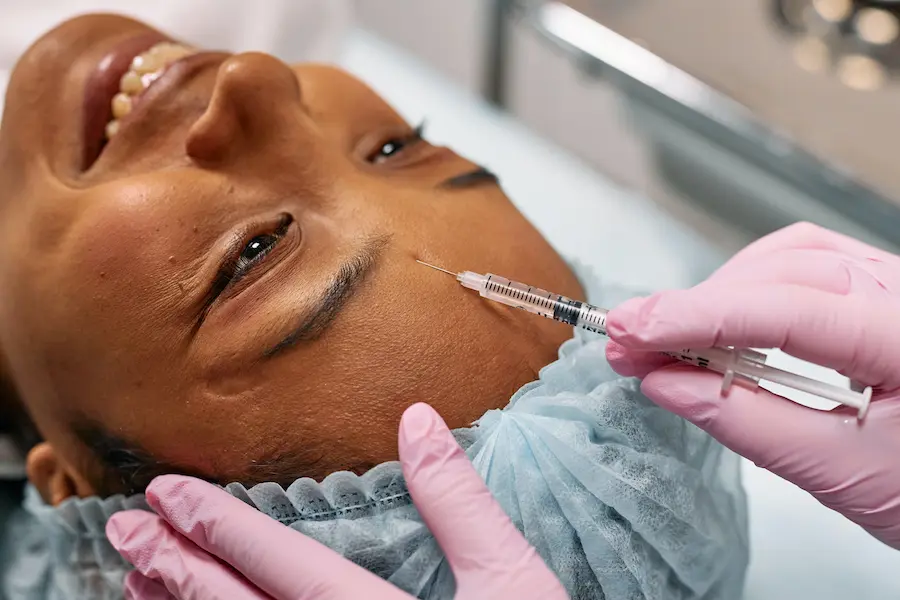

Cloud-native computing demands agility and adaptability, and both are put to the test when integrating stateful applications with Kubernetes. These applications bring their own requirements, often needing a consistent disk state regardless of which node they are scheduled on. Developers working with stateful applications must be mindful of how their applications read from and write to disk, and ensure that these operations are compatible with Kubernetes’ distributed, dynamic nature.
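The usual Kubernetes answer to per-replica disk state is a StatefulSet with `volumeClaimTemplates`. Each replica gets its own PVC, and a rescheduled replica reattaches to the same claim. The sketch below builds such a manifest. The image `registry.example/db:1.0`, the mount path `/data`, and the names are hypothetical, and a headless Service with the same name is assumed to exist. The helper reproduces the `<template>-<statefulset>-<ordinal>` naming Kubernetes uses for the generated claims.

```python
import json
from typing import List


def make_statefulset(name: str, image: str, replicas: int,
                     size: str, storage_class: str) -> dict:
    """StatefulSet whose replicas each get a dedicated PVC named 'data-...'."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,  # headless Service assumed to exist
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                    }],
                },
            },
            # One PVC per replica is created from this template.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": size}},
                },
            }],
        },
    }


def claim_names(sts: dict) -> List[str]:
    """PVC names Kubernetes generates: <template>-<statefulset>-<ordinal>."""
    name = sts["metadata"]["name"]
    templates = sts["spec"]["volumeClaimTemplates"]
    return [f'{t["metadata"]["name"]}-{name}-{i}'
            for i in range(sts["spec"]["replicas"]) for t in templates]


if __name__ == "__main__":
    sts = make_statefulset("db", "registry.example/db:1.0", 3, "20Gi", "fast-ssd")
    print(json.dumps(sts, indent=2))
    print(claim_names(sts))
```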

Good development practices are part and parcel of this integration. It becomes crucial to design applications to be ‘state-aware’: able to handle disruptions and maintain consistency without relying on persistent storage for every process. Applications designed this way use Kubernetes volumes only for what truly must persist. They get the full benefit of persistent storage without becoming heavily dependent on it.

In practice, many organizations run stateful workloads such as databases on Kubernetes and rely extensively on persistent volumes. These workloads benefit from Kubernetes’ scalability and fault tolerance. Their success supports the case for Kubernetes as a platform that can serve applications with a wide range of persistence demands.

Monitoring and Optimizing the Performance of Persistent Volumes

Monitoring is not just a best practice; it is a necessity when managing persistent volumes in a live Kubernetes environment. Tracking the right metrics lets you identify problems before they escalate. For instance, watching IOPS, throughput, and latency can reveal storage bottlenecks early enough for proactive intervention. Tracking capacity utilization is equally important, because it prevents unexpected outages caused by a volume filling up.
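Capacity tracking can be sketched as follows, assuming Prometheus scrapes the kubelet, which exposes `kubelet_volume_stats_used_bytes` and `kubelet_volume_stats_capacity_bytes` per claim. The Python flags claims that are above a utilization threshold. It also flags claims that, at their current growth rate, will fill within a chosen horizon; the growth rate is a hypothetical input assumed to be measured elsewhere. The 80% threshold and 7-day horizon are arbitrary example values.

```python
from dataclasses import dataclass
from typing import List, Optional

# Equivalent PromQL utilization check, assuming the kubelet metrics are scraped.
ALERT_EXPR = (
    "kubelet_volume_stats_used_bytes / kubelet_volume_stats_capacity_bytes > 0.8"
)


@dataclass
class VolumeStats:
    pvc: str
    used_bytes: int
    capacity_bytes: int
    growth_bytes_per_day: float = 0.0  # hypothetical, measured externally


def utilization(v: VolumeStats) -> float:
    return v.used_bytes / v.capacity_bytes


def days_until_full(v: VolumeStats) -> Optional[float]:
    """Linear projection; None if the volume is not growing."""
    if v.growth_bytes_per_day <= 0:
        return None
    return (v.capacity_bytes - v.used_bytes) / v.growth_bytes_per_day


def volumes_at_risk(stats: List[VolumeStats], threshold: float = 0.8,
                    horizon_days: float = 7.0) -> List[str]:
    """Claims that are already too full or projected to fill soon."""
    at_risk = []
    for v in stats:
        days = days_until_full(v)
        if utilization(v) > threshold or (days is not None and days < horizon_days):
            at_risk.append(v.pvc)
    return at_risk
```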

When performance issues arise, resolve them systematically. That might involve reviewing recent changes to the environment, comparing current performance against historical baselines, or running diagnostic tests to pinpoint the root cause. Effective troubleshooting examines the various system components to determine whether a problem is isolated to one volume or part of a broader systemic issue.
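The isolated-versus-systemic question can be framed as a comparison against history. This hypothetical helper compares each volume’s current p99 latency with its own baseline and reports whether degradation is confined to a few volumes or widespread. The 2x factor and 50% cut-off are arbitrary assumptions to tune, and the source of the latency numbers is left open.

```python
from typing import Dict, List, Tuple


def classify_latency(baseline_ms: Dict[str, float], current_ms: Dict[str, float],
                     factor: float = 2.0,
                     systemic_fraction: float = 0.5) -> Tuple[str, List[str]]:
    """Return ("healthy" | "isolated" | "systemic", degraded volume names)."""
    # Only volumes with a recorded baseline can be judged.
    compared = [v for v in current_ms if v in baseline_ms]
    degraded = sorted(v for v in compared if current_ms[v] > factor * baseline_ms[v])
    if not degraded:
        return "healthy", []
    share = len(degraded) / len(compared)
    return ("systemic" if share >= systemic_fraction else "isolated"), degraded
```

Comparing each volume with its own history, rather than with the current median, matters here. If every volume slows down at once, the median rises with them and would hide exactly the systemic case.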

Fortunately, Kubernetes users have many tools for monitoring and optimizing storage performance. Examples include Prometheus, which can scrape the kubelet’s volume statistics, and Grafana for dashboards. These tools provide valuable insight into the system’s health and efficiency, enabling data-driven decisions about scaling and tuning your persistent volume configurations.

Automating Persistent Volume Operations in Kubernetes

As Kubernetes environments grow, so too does the complexity of managing them. Automating operations around persistent volumes can significantly reduce this complexity, improving consistency and scalability. Automation in Kubernetes is achieved by codifying resource definitions and management tasks, allowing for both rapid provisioning of storage resources and efficient lifecycle management.
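Codifying resource definitions can be as simple as rendering manifests from a single declarative spec kept in version control. In this sketch, the spec format (namespace, then claim name, then size in GiB), the `managed-by` label, and the 500 GiB guardrail are all hypothetical. The output is a `v1` `List` that `kubectl apply -f` can consume.

```python
import json
from typing import Dict

MAX_GI = 500  # hypothetical per-claim guardrail


def claims_from_spec(spec: Dict[str, Dict[str, int]], storage_class: str) -> dict:
    """Render one PVC per (namespace, claim) entry, sorted for stable diffs."""
    items = []
    for namespace in sorted(spec):
        for claim, size_gi in sorted(spec[namespace].items()):
            # Validate before rendering so bad input never reaches the cluster.
            if not 0 < size_gi <= MAX_GI:
                raise ValueError(f"{namespace}/{claim}: {size_gi}Gi outside 1..{MAX_GI}Gi")
            items.append({
                "apiVersion": "v1",
                "kind": "PersistentVolumeClaim",
                "metadata": {
                    "name": claim,
                    "namespace": namespace,
                    "labels": {"managed-by": "storage-automation"},
                },
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": f"{size_gi}Gi"}},
                },
            })
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    desired = {"team-a": {"db": 20, "cache": 5}, "team-b": {"uploads": 50}}
    print(json.dumps(claims_from_spec(desired, "fast-ssd"), indent=2))
```

Sorting namespaces and claim names means the same spec always renders byte-identical output, so reviewers see only real changes in a diff.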

Controllers and operators extend this automation further, handling volume lifecycle events such as provisioning, snapshotting, and resizing. They watch resources through the Kubernetes API and continuously reconcile the actual state of the cluster toward the declared state. This turns tasks that were traditionally performed by hand into manageable, repeatable workflows.
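At the heart of an operator is a reconcile step that compares declared and observed state and computes the actions needed to close the gap. The sketch below does this for claim sizes only, with plain dictionaries standing in for the Kubernetes API (an assumption for illustration). In a real cluster, expansion means raising `spec.resources.requests.storage` on the claim, which works only if its StorageClass sets `allowVolumeExpansion`. Shrinking a PVC is not supported, so the sketch reports that case instead of acting on it.

```python
from typing import Dict, List


def reconcile(desired_gi: Dict[str, int], observed_gi: Dict[str, int]) -> List[str]:
    """Compute actions that move observed claim sizes toward desired ones.

    Dicts map hypothetical claim names to sizes in GiB; a real operator
    would read these from, and write them back to, the Kubernetes API.
    """
    actions = []
    for name in sorted(desired_gi):
        want = desired_gi[name]
        have = observed_gi.get(name)
        if have is None:
            actions.append(f"create {name} {want}Gi")
        elif want > have:
            actions.append(f"expand {name} {have}Gi->{want}Gi")
        elif want < have:
            # PVCs cannot shrink; surface the drift rather than act on it.
            actions.append(f"skip {name}: shrink {have}Gi->{want}Gi not supported")
    return actions
```

Running the function repeatedly is safe: once observed state matches the declaration, it returns no actions. This idempotence is what lets a controller loop run continuously.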

Ultimately, by embracing automation and using operators for volume management, organizations can make their Kubernetes operations more efficient and less error-prone. Adopting these practices reflects a forward-looking management strategy and a deeper understanding of Kubernetes’ capabilities.

Future Trends in Kubernetes Storage and Persistent Volumes

The Kubernetes storage landscape is constantly evolving, driven by technological advances and organizations’ shifting needs. Much of the focus is on cloud-native storage solutions that put scalability, performance, and agility first. These solutions are built for the fast-paced, dynamic nature of containerized environments and offer new approaches to data management within Kubernetes.

Kubernetes’ extensible design makes these cloud-native storage options easy to adopt. Through the Container Storage Interface (CSI), storage vendors ship drivers that plug into existing clusters without changes to Kubernetes itself. As a result, organizations can take advantage of new capabilities without major overhauls to their infrastructure.

As technology progresses, the Kubernetes community plays a pivotal role, driving innovation through shared knowledge and experience. This collaborative spirit keeps pushing boundaries, fostering an environment where new solutions can be trialed and best practices shared. As Kubernetes matures, the willingness to adapt and learn together will shape how persistent data is managed and used in cloud-native computing.
